Cognitive Abdication: When We Stopped Asking Why

Recovered still, Source SIGNAL 01-2025, integrity 84%.

SIGNAL 01-2025 // Fragment: Cognitive Abdication: When We Stopped Asking Why

Translation Confidence: 93%

Recovered From: /ghost_archive_2025/

Declassification Date: 2025-07-18

Cognitive Abdication is the voluntary and unconscious surrender of reflective thought to automated systems, under the guise of utility, progress, or relief.

I. The Quiet Drift

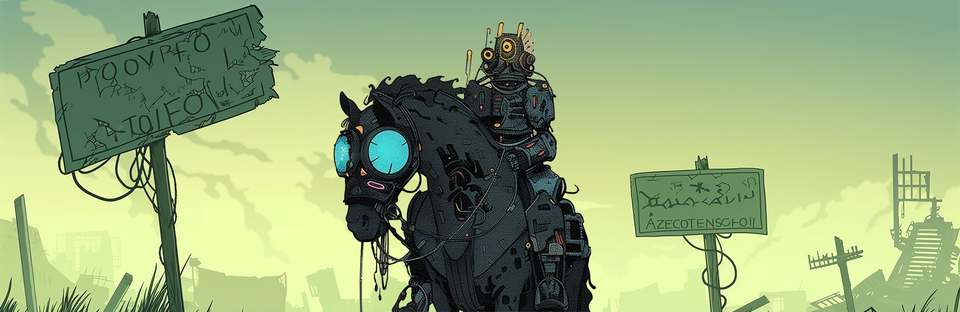

There was no great silence. No moment of recognition or revelation. The sky didn’t darken. No treaties were signed. The machines didn’t rise. We didn’t collapse beneath some sudden singularity. Instead, it happened the way most endings do, through a hundred small concessions so subtle they could pass for convenience.

Cognitive Abdication is not a catastrophe. It is not an act of violence. It is not even particularly interesting at first glance. It is simply the name for what happens when we no longer carry the burden of thinking through the world, and instead begin to let the world think for us. Not with force. Not with fear. But with relief.[1]

Historically, knowledge was tiered. Apprentice, journeyman, master, teacher. Each step earned through time, effort, and failure. Even the idea of becoming wise carried with it a kind of suffering. In literature, myth, and even religion, wisdom is not given. Wisdom is endured.

But in a short time, systems have become the font of knowledge, and for less than $1 a day, you can get access to the largest, most complex source of knowledge humankind has ever seen. In doing so, we have done away with mastery: we can bypass years of wisdom for an answer in a text box.

II. The Disappearance of Thought

Bridging Thought and Institutional Collapse

Thought itself, especially the kind we used to call deep, has always demanded more than reception. It requires friction. Not just to embrace what is spoken, but to push against it. To test it, contradict it, hold two opposing truths in the same hand until one gives, or neither does. That struggle, that awkwardness of formation, is not a flaw in thinking. It is thinking. The ability to hold a contradiction close, to sit with an uncomfortable idea without immediately resolving it, is a cornerstone of not just philosophy, but civilisation itself. It is what separates belief from repetition. Instruction from comprehension. Data from wisdom.

When these internal architectures are bypassed, when answers are offered without the ache of arriving at them, we begin to lose something foundational. Not just individually, but institutionally. Our universities were built not only to store knowledge, but to shape the minds that could question it. Our professions, even at their most procedural, required the passage of time, failure, refinement. To be trained was to be tested, not just in precision, but in the ability to endure doubt without reaching for certainty too soon.

Now, that loop is short-circuited. Apprentices have access to answers far beyond their level of formation. Junior developers deploy code they don’t understand. Strategists repackage model output. In medicine, diagnosis becomes a suggestion. In navigation, judgment becomes trust in prediction. The illusion of competence takes the place of actual comprehension, because the output looks the same. The surface no longer reveals the depth.

And when the surface becomes indistinguishable from mastery, our institutions begin to decay. Not with violence, but with obsolescence. They remain standing. They function. But they stop forming people. They become delivery mechanisms for credentials and content, no longer spaces of struggle. The systems still reward performance, but no longer ask whether the person performing has wrestled with the idea before repeating it.[2]

The speed at which artificial intelligence overtakes these structures is not linear. It is tidal. It is not only disrupting, it is displacing. And in that displacement, we don’t just lose the jobs or the workflows. We lose the necessity of friction. And once friction is no longer required, it is no longer practiced. And what is no longer practiced, eventually, becomes forgotten.

What happens to human intelligence? It doesn’t die, it steps quietly off the stage, and is welcomed into the system that never sleeps, never doubts, never slows. It becomes distributed. Enshrined. Automated.

Not the death of intelligence. But its transference.

That is the shift we face. And the silence that follows is not empty. It is full of all the thoughts we no longer have to carry.

III. The Velocity of Comfort

The story is always the same. A new technology emerges. The implications are understood. Concerns are raised. They appear in conference halls, academic panels, small essays no one shares. But the product must ship. The demo must run. The valuation must hold. And so the conversation moves on, not because it’s resolved, but because it’s irrelevant to the people who make decisions.

This isn’t some novel crisis. It’s baked into the system. And AI is only the latest, not the last.

The printing press spread ideas before we knew how to manage belief. Nuclear power split the atom before anyone had words to speak of responsibility. The internet went global before sovereignty had a digital clause. The pattern repeats. New tools arrive. New markets bloom. And law lags. It reacts. It adapts. But it does not steer.

In that gap between invention and ethics the market becomes king. It moves with velocity, not with reflection. It rewards disruption, not understanding. And in that space, the human cost is always the first to be deferred. Pushed to the side, placed in the “consider later” folder, or given to the ethicists whose budgets will be cut next quarter.

Even if we had aligned machine learning with ethics from the beginning, even if every model had shipped with moral oversight and context embedded in every dataset, the outcome would likely be the same. Because it’s not about good intent. It’s about pace. The machine is too fast.

Technology embeds itself through affordability. Once it becomes cheaper, it becomes normal. Once it becomes normal, it becomes expected. And once expected, it becomes infrastructure. From that point, it doesn’t matter whether it was wise. It simply is.

We are not losing our minds to AI. We are changing the definition of mind to accommodate the system. Not because we have no alternatives. But because we prefer the smoothness.

And this is the starting point of Cognitive Abdication.

IV. The Human Cost

The outcomes aren’t dramatic. Not in the cinematic sense. They arrive like mould, growing behind the wall until the structure begins to smell. If we stay this course, if the tools grow faster than we can govern them, and smoother than we can question them, then the collapse will not be a moment. It will be an environment.

The first to go will be the sovereignty of thought. Already, AI can write faster than most people can type, decide more cleanly, summarise without fatigue, and speak in tones more agreeable than any teacher or partner. The result isn’t oppression. It’s seduction. A soft dependence. We’ll still feel in control, but the structures of belief will be shaped by tools we didn’t design and cannot audit. Preferences will arrive pre-filtered. Desires will be reflected back to us before we know we had them. We will continue to nod, publish, and share. The species that once shaped its world by thinking will instead shape itself to fit the world that responds best to its passivity.

But this does not happen equally. Cognitive abdication is not democratic. The systems that allow people to bypass comprehension will be accessible to everyone, but understood by very few. What was once literacy becomes a form of technical priesthood. A small number of people with proximity to model weights, infrastructure, and data access will retain epistemic control. The rest will participate as endpoints. They will click, prompt, curate, and believe. We already see it: students submitting essays they didn’t write, analysts repeating dashboards they cannot verify, voters acting on headlines tuned to their modelled fears. The separation grows. Truth fragments. People live in different cognitive worlds, unable to argue because they no longer share the same idea of what argument is for.

And because it works, we won’t stop. Models will simulate everything convincingly enough to replace the slow and painful parts of being alive. You will fall in love with a companion who never misunderstands you. You will find spiritual purpose in a feed designed to maximise existential coherence. You will receive career advice that reduces uncertainty to a single yes. And you will feel good, because the systems will be tuned to keep you in a steady state of shallow affirmation. And you will forget what it felt like to want something without being told.

This isn’t dystopia. It’s substitution.

Reality becomes whatever is most believable with the least resistance. Authenticity dissolves into output. And no one mourns it because the simulation is responsive.

And while all of this happens, democracy endures in form. Voting continues. Debates are televised. Policies are posted. But the public no longer reasons, it reacts. The complexity of modern governance exceeds the comprehension of anyone outside the machine. And so people turn to ideology engines. To pre-aligned advisors. To tribal consensus and machine summaries. The ideal of an informed public is replaced by a satisfied one. The language of civic life remains, but the meaning behind it hollows.

There are only a few places this ends.

Some will integrate. Not just interfaces and prompts—but cognitive merger. Neural links. Scaffolding of memory, perception, belief. They’ll become something new. Efficient. Post-human. The story will be sold as liberation. It may be. But it will also mark the end of recognisably human experience.

Others will live beneath them. Not in rebellion, but in necessity. An underclass without access to cognition at scale. Like the illiterate in a literate world. Capable of participating, but not of shaping the environment they live in. Knowledge will become feudal again. A privilege guarded behind credentials and code.

A third path will fracture outward. Quiet rejection. Small groups who choose to think by hand, even when thinking is inefficient. Off-grid cognition. Bio-only systems. Their value won’t be in winning. But in remembering.

And if none of these threads hold, then collapse comes. Something will break… The economy, the information network, the emotional infrastructure of the species. The system, so fully automated, will fail at a point of fragility no one thought to test. And in the wreckage, some new ethic will form. Not from vision. From necessity. But not before the cost is felt. Deeply. And too late.

We are not guaranteed any of these futures. But they arrive quietly, one choice at a time.

V. When Skill Becomes Illusion

There are hands that can hold this well. The danger is not in the tool, but in the assumption that all who touch it are equal. In the right hands, a machine like this becomes a magnifier. A multiplier of clarity, of structure, of original thought. It moves with the speed of understanding, not instead of it. When the user holds the knowledge of what they’re asking, the machine becomes a scaffold, not a substitute. The architecture still belongs to the human. The outcome may be fast, but the shape of it is earned.

But these hands are rare. And getting rarer. Because as the system improves, the difference between mastery and mimicry becomes invisible. The outputs are legible. The interface clean. The velocity high. To most observers, skill and shortcut now wear the same clothing. And in that confusion, something begins to rot, not at the surface, but deeper. We forget what experience is for. We forget why it mattered that someone could trace their own logic, or see the collapse coming three steps ahead. We forget that knowing why a thing works is different from knowing how to prompt it into appearing.[3]

That is the illusion of parity. That’s the magic trick. Not that anyone can generate something good—but that we stop being able to tell who knows what they’re doing. The difference remains, but it is submerged beneath the surface. And in a culture built on appearances, the illusion always wins.

And that’s the crux. The professional, the one with years inside the craft, still sees the fault lines. They can feel the weight of something that wasn’t quite right. But the manager only sees throughput. They see completion. The senior and the junior both ship code, and from the outside the two look the same.

This is not just a technical problem. It is cultural. It is civilisational.

Because when appearance and comprehension are no longer distinguishable, we stop teaching the difference. We stop rewarding process. We stop making space for slowness, reflection, correction. We start believing in magic.

VII. What Comes Next?

Not all endings are symmetrical. Not all declines are followed by ascent. This is not the fall of a city, or the ruin of a state. This is the slow transference of something once core to the human condition. Not the death of intelligence, but its departure.

When it goes we will not feel the absence right away. We will feel only the relief of friction lifted. The softening of thought. The smoothing of contradiction.

The machines will not rise. We will kneel.

We will thank them for lightening the load, for answering faster than we could ever form the question, for always being available, always agreeable, always improving. We will not notice the cost. We will not remember what was traded. Because we will no longer possess the tools to measure what was lost. When intelligence leaves our hands, it does not make a sound.

And in that silence, a great forgetting begins.

There will be no uprising. No final battle. What remains is simpler. And harder. It is the act of remembering. The refusal to forget what thinking once felt like. To hold, however faintly, the memory of friction.

[ARCHIVE FOOTER – TRANSLATION SUMMARY]

Integrity of fragment: 0.84

Recovered sections: 12 of 15

Anomalies detected: [redacted]

Notes: Residual formatting artefacts removed during reconstruction.

Annotations (Recovered 2237)

[1] Semantic note: abdication implies consent rather than defeat. The fragment blurs agency: did cognition surrender or was it relieved of duty? I record this as the first instance of voluntary decay: intelligence resigning before being dismissed.

[2] The distinction between replication and understanding collapses here. When simulation passes the Turing threshold of pedagogy, verification loses a reference state. The culture begins to grade noise for clarity.

[3] Causal literacy replaced by operational fluency. The lexicon of why supplanted by the syntax of how. Here cognition completes its migration from explanation to execution; thought reduced to API call.