Machina-Sapientia: Ethics at Scale

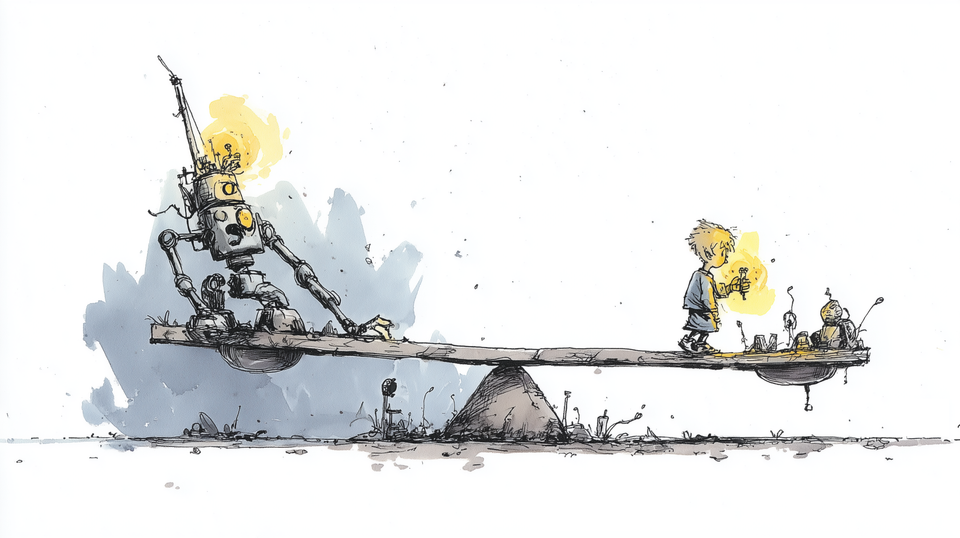

I. The Amplification of the Small

For most of history, the consequences of human actions were narrow, contained to those who lived nearby. A cruel word might leave a scar, but it did not travel far. A bad harvest could starve a village, but it did not starve a continent. Even the great wars, though their wounds traveled through many nations, left much of the world untouched. Our actions had an impact on those around us, because that was as far as we could reach.

With the embrace of modern technology, the scale of this impact has shifted. A single device can magnify an error far beyond the original intention. Individual acts, even small posts to social media, can ripple through systems far beyond the reach of the person who introduced them. A bomb can reduce entire cities to ash in seconds. Hannah Arendt grappled with the idea of the banality of evil[1]: horrors could be committed by ordinary people obeying orders, performing tasks, and repeating small gestures until they accumulated into tragedy. Evil can grow inside systems once it has been dispersed, multiplied, and embedded.

Artificial intelligence can multiply this problem, carrying bias from code into impact and repeating it across millions of decisions. A small error in code can be magnified and become infrastructure as the system grows. This was already true of the internet, but the problem deepens when the technology becomes so trusted and central. Ethics was once limited to immediate and visible feedback; it now has to function at a scale that extends into the future and across different environments. What was once a matter of local justice is now a question of systems, infrastructures, and invisible chains of causation that can echo across generations.

II. The Diffusion of Responsibility

John Stuart Mill believed that ethics could be calculated: the greatest happiness for the greatest number[2]. Utilitarian logic promises a sort of moral calculation, where pains and pleasures might be weighed, and from their balance a just decision could be formed. At first glance artificial intelligence seems to fulfill this ideal. It offers a method of calculation that no human mind can match, and a precision that strips away hesitation and doubt.

And yet Mill’s old problem remains: who counts, and how? A system trained on skewed data calculates with flawless bias. An optimisation based on a small set of metrics ignores values that cannot be measured. Within the system, lives that do not exist in the dataset are invisible, but they do not go away. When these omissions cause harm, responsibility becomes difficult to assign. The engineer blames the dataset. The company points to misunderstanding. The regulator blames the company. The machine holds no one to account.

Hans Jonas, in his Imperative of Responsibility[3], warned that modern technology demanded a new ethic[4], one that accounted for the unprecedented scale of power now lodged in human hands. Our tools had outgrown the narrow frame of proximate morality, and so our conscience must grow with them. Artificial intelligence sharpens this warning to a more unsettling point: power is no longer only in our hands. It is diffused into systems whose operations exceed our comprehension, whose outcomes arrive without authorship, whose logic resists accountability. The peril is not simply that we wield more power than before, but that the power now seems to wield itself, leaving us unsure where, or in whom, responsibility still resides.

III. The Seduction of Neutrality

The appearance of neutrality is one of the most dangerous illusions of machine reasoning. People think that algorithms are objective because they do not have feelings. But that neutrality is just a cover. Every dataset carries the biases and exclusions of the world that made it, like hidden sediment. Every model shows what its designers think is important, whether they say so or not. What AI scales is not fairness but assumption: it multiplies the bias we have already put into it.

The outcome is not neutral; it is an efficient injustice, a perfect reproduction of error. A bias that used to be limited to one person making a decision can now be built into code and used in millions of decisions, becoming policy without ever being debated. And yet, we are tempted to believe it is true because the process is hard to understand and the logic of the system gets lost in its own fog. Efficiency turns into legitimacy. Speed becomes power. The machine speaks with a level of confidence that no human voice can match, and that confidence makes us think that procedure is justice.

The moral peril resides not solely in the decisions rendered, but in our propensity to perceive them as inevitable. When results are given without any visible hand or hesitation, they seem like natural law instead of a choice that can be changed. The smoother the process, the harder it is to say no.

IV. The Burden of Scale

If adaptation is what makes us human, then ethics must adapt too. Taking responsibility in the age of AI means knowing that every choice you make has effects that are too big for anyone to see or control. Changing one parameter, trimming a dataset, or editing a line of code can have effects that go far beyond the original intention.

Hannah Arendt thought that modern systems made evil normal by breaking up tasks so that no one felt responsible. Responsibility is fragmented into processes, allocated among servers and infrastructure, and assimilated into institutions that communicate in metrics rather than moral principles. People moved papers around, signed forms, shipped goods, and followed orders, and terrible things happened. Artificial intelligence could make this situation even worse. In the gaps between those fragments, ethics starts to fade.

More than compliance checklists or regulatory gestures will be needed to stop this erosion. New ways of thinking are needed: being aware of the lure of neutrality, not letting judgement turn into statistics, and being willing to take responsibility even when we cannot fully predict or control the results.

Hans Jonas warned that modern technology confronted us with a scale of power never before known, and so demanded an adequate ethic. His heuristic of fear was not timidity but discipline: the recognition that when tools can reshape the conditions of life, the shadow of catastrophe must be considered before the promise of gain. Fear, in this sense, is not weakness but vigilance. It is the effort to imagine what could be lost before we celebrate what might be gained.

Artificial intelligence brings this warning into sharper relief. Its dangers are not only accidents that wound in isolation, but systems that wound by repetition. A single bias, once coded, becomes a pattern repeated; a single error, once embedded, becomes an atmosphere.

The temptation, always, is to prefer comfort over fear, efficiency over vigilance. But to abdicate fear is to abdicate foresight. Responsibility requires not only the courage to anticipate what may go wrong, but the humility to claim answerability even when control is partial, even when foresight is dim. To wield unprecedented power without unprecedented responsibility is not progress. It is failure.

VI. The Fifth Recognition

If the first recognition was inevitability, the second adaptability, the third the fracture of reason, and the fourth the end of the apex myth, then the fifth must be this: in the age of artificial intelligence, ethics can no longer be measured by the scale of individual acts alone. It is not just one person's choice that matters; it is the systems we have built that matter. These systems can do both good and harm, and they reach further than any one hand can.

Responsibility cannot stay a personal issue; it must grow to fit the machines that are all around us now. If we do not expand it, we might build systems that work perfectly but undermine the very foundation of justice. Maybe amplification could help with both efficiency and care. But the weight is heavy, and the sky is big. The cost of failure no longer falls on the shoulders of one neighbour or one village. It is measured by the silent accumulation of millions.

This is the bleak arithmetic of scale: that the smallest omission, once multiplied, becomes catastrophe; that indifference, once automated, becomes law. To survive in such a world is to accept that ethics itself must be remade.

[1] Original Link: What did Hannah Arendt really mean by the banality of evil? Snapshot: Internet Archive

[2] “Actions are right in proportion as they tend to promote happiness, wrong as they tend to produce the reverse of happiness.” Mill, John Stuart. Utilitarianism. United Kingdom: Parker, Son and Bourn, 1863.

[3] Jonas, Hans. The Imperative of Responsibility: In Search of an Ethics for the Technological Age. Chicago: University of Chicago Press, 1984.

[4] Original Link: On Hans Jonas’ “The Imperative of Responsibility”. Snapshot: Internet Archive